Startup Mistakes That Cost: Wrong Turns That Burn Millions

Having worked with over 30 startups across multiple verticals over the years, I’ve become increasingly curious about where these businesses go wrong in their infancy, and why they fail to reach maturity. What are these hurdles, and why can they be fatal? Things can (and often do) go awry anywhere in the plate-spinning act of legal, investor relations, HR, financial management and other key areas. However, I’m going to focus mostly on the growth side of the equation, as that is where I’m most qualified and where I spend most of my time learning, experimenting and supporting these businesses: cost-effectively acquiring new customers. For reference, whether I’m working as a part-time CMO or interim head of growth, I most commonly encounter two main types of business:

- Heavily seed-funded independent startups
- Innovation projects/incubators inside more mature businesses

Although they can differ in structure, team and culture, in my experience they are often markedly similar in where they go wrong. In this article, I will present the common problems, ideas about solutions, and a process mapping technique I have developed and use, with some tangible learnings to share. To illustrate the process, I will use a client case study: a recent engagement with a B2C mobile app startup in the home maintenance space, where I mapped out their status and analysed what they thought was going wrong. They were an early-stage innovation project taking heavy seed investment from their corporate investors.

Their challenge

In their first 3 months, I helped them go from 0 to $25k MRR (Monthly Recurring Revenue): a very humble but reassuring beginning. However, there were still big challenges preventing them from growing scalably and cost-effectively. I was asked to join as part-time marketing director, working closely with their existing founding team. At this stage, I’d like to highlight that nobody really wants to call in an external consultant! Consultants are seen as an issue for three reasons:

- They are a cost, and if a startup isn’t growing scalably, that’s an additional expense it really doesn’t need.
- Consultants aren’t aligned with the incentives that equity holders have. This is usually down to traditional commercial models that don’t align interests; frequently this is a fixed day rate tied to time, which doesn’t make much sense for knowledge workers.
- It is perceived as a sign of weakness or failure. Psychologically speaking, everybody wants to be independent and self-sufficient. To compound this, growing businesses are happy to take on more talent, whereas a struggling business (startup or otherwise) is a tense environment, not particularly fruitful for creativity or innovation.

Regardless, their CEO set out the following objectives:

- Growth - To generate a 20% monthly increase in mobile app usage from new users
- Focus - To help him answer “What should we prioritise?” and, conversely, “What can we afford not to focus on?”, and to help him define “What does good look like?”
- Brand - To support the realignment of their positioning so that their value proposition matches the needs of their target audience.
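A 20% month-on-month target compounds quickly, which is worth making explicit when defining what “good” looks like. A minimal sketch, assuming growth is measured as a constant month-on-month multiplier on new-user usage; the starting figure of 1,000 is hypothetical, not the client’s data:

```python
def project_monthly_growth(start: float, monthly_rate: float, months: int) -> list[float]:
    """Projected value for month 0..months under constant month-on-month compounding."""
    values = [start]
    for _ in range(months):
        # Each month is the previous month multiplied by (1 + rate).
        values.append(values[-1] * (1 + monthly_rate))
    return values


def annual_multiplier(monthly_rate: float) -> float:
    """Growth factor after 12 months of constant compounding."""
    return (1 + monthly_rate) ** 12


if __name__ == "__main__":
    # Hypothetical baseline: 1,000 monthly app sessions from new users.
    for month, value in enumerate(project_monthly_growth(1_000, 0.20, 12)):
        print(f"Month {month:2d}: {value:,.0f}")
    print(f"12-month multiplier at 20%/month: {annual_multiplier(0.20):.2f}x")
```

At a constant 20% a month, the target implies roughly a ninefold increase over twelve months (1.2^12 ≈ 8.9), a useful sanity check when deciding what each channel has to deliver.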

My approach

When I get involved with a startup, particularly as an outsourced CMO, I generally approach it with three objectives:

1. Understand their current pain-points - an information gathering exercise to understand and prioritise the areas to focus on fixing.
2. Map out their customer journeys - understand the customer and their needs, and analyse how these needs are currently being solved.
3. Generate actionable insights and recommendations - use the first two steps to formulate a prioritised, scalable pipeline of growth marketing opportunities.

1. Understand the current pain-points

This is an information gathering exercise. It is an important stage, particularly because it stops you from jumping straight to how you would fix things before you have all the information.

- Speak to all existing cross-functional teams (critically: marketing, product, finance, tech, operations, customer service).
- Listen attentively to their replies and ask lots of questions, while challenging their responses in your head.
- Crucially, identify the common themes, the range of responses and any contradictory information.

Document the responses in a format that works for you, so that you can build out your own thoughts later. A subset of the key questions I frequently ask is below:

Key questions that I commonly ask

- Customer need state: How has the customer’s need been identified and captured? How well does your solution match the customer’s need?
- Customer journey: How much energy do users need to exert to get their need met?
- Product/service USP: How differentiated is the product from the current alternatives available to the user?

It is important to approach this objectively and avoid confirmation bias. Even so, be mindful that you may need to qualify and weight the responses, as not all answers to these abstract questions are equal. The main reasons for this are:

1. Different interpretations of language between tech, finance, product, design and HR (e.g. shared definitions of when a “user” becomes a “customer”)
2. The level of experience required to interpret the answers in a meaningful way
3. Most importantly, how to execute on the answers in practice

By way of an example, I asked key team members “What do you think are the reasons the product isn’t working?”, and here are some of their answers:

Team member A:

- Messaging isn’t clear on product benefits
- Missing cues on what to do next from the mobile app landing page
- Need to educate customers / wrong customers

Team member B:

- We don’t explain well what it is we do
- We are (not) anchoring the USP in the user’s mind
- Our USP isn’t clear
- Identifying and extracting needs from customers isn’t done clearly
- Lack of understanding of the drivers behind customer behaviours
- Not tapping into the customer’s need state

It was clear that Member B understood the problem. Member A (a competent and smart professional) believed it was possible to educate customers to do something new, which may be viable, but I’ve not yet seen it work.

The role of using historic data

Whilst data gathering is crucial to understanding why things aren’t working, I often find that startups have neither established reliable data collection nor reliably implemented tracking solutions. This results in inconsistent, incomplete, limited or unreliable data sources to draw insights from. Invariably, part of my recommendations will focus on ways to improve their data collection. When data is available, I focus on two main areas:

- Are you driving traffic that isn’t converting?
- How far down the funnel do people drop off?
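Answering “How far down the funnel do people drop off?” needs only a count of users reaching each stage. A minimal sketch, assuming you can export those per-stage counts from your analytics tool; the stage names and numbers below are hypothetical:

```python
from dataclasses import dataclass


@dataclass
class StageReport:
    stage: str
    users: int
    step_conversion: float     # share of the previous stage that reached this stage
    overall_conversion: float  # share of the first stage that reached this stage


def funnel_report(stages: list[tuple[str, int]]) -> list[StageReport]:
    """Step-by-step and cumulative conversion for an ordered list of (stage, users)."""
    if not stages or stages[0][1] <= 0:
        raise ValueError("the first stage needs a positive user count")
    top = stages[0][1]
    previous = top
    report = []
    for name, users in stages:
        step = users / previous if previous else 0.0
        report.append(StageReport(name, users, step, users / top))
        previous = users
    return report


def biggest_drop(report: list[StageReport]) -> StageReport:
    """Stage (after the first) with the lowest step conversion."""
    if len(report) < 2:
        raise ValueError("need at least two stages to compare")
    return min(report[1:], key=lambda r: r.step_conversion)


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    stages = [
        ("Landing page visit", 10_000),
        ("App store page view", 2_500),
        ("Install", 900),
        ("Sign-up", 400),
        ("First booking", 60),
    ]
    report = funnel_report(stages)
    for r in report:
        print(f"{r.stage:<20} {r.users:>6,}  step {r.step_conversion:6.1%}  "
              f"overall {r.overall_conversion:6.1%}")
    print("Largest step drop-off at:", biggest_drop(report).stage)
```

The weakest step is a symptom to investigate rather than automatically the step to fix; the same numbers can come from a journey that asks too much of users or from traffic that never had the need in the first place.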

From this process, I started to build an understanding of their current pain-points, namely:

- Their differentiator was unclear and perhaps not relevant to addressing the user’s needs
- Users did not understand how their new service worked
- They were not reaching enough users who were in genuine need of their service

2. Mapping out their user journey

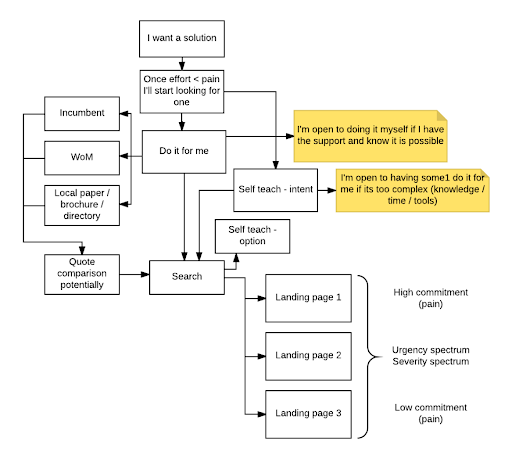

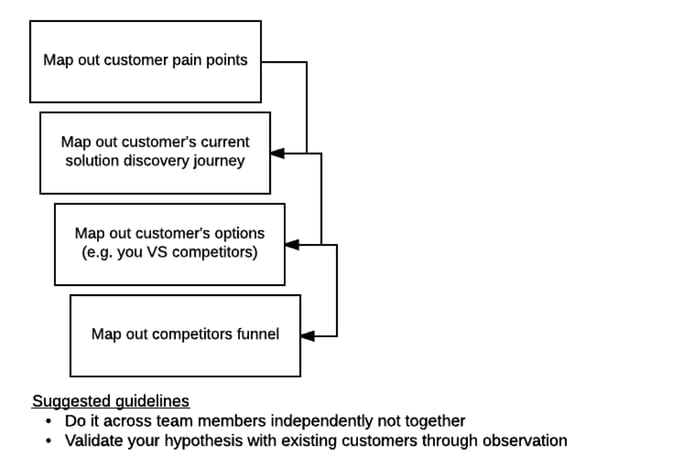

The objective here is to identify their existing and preferred user journeys, and where, and importantly why, they aren’t working. A common mistake here is hyper-focusing on your own product rather than the customer journey. Most frequently I am shown the funnel as the business sees it. However, it’s more worthwhile to map out the journey as the customer sees it. As you may imagine, this process can be pretty complex. So when I come in as an outsourced marketing director, I generally use the following model, documenting it with free tools like Lucidchart to map out the user journey as I understand / predict it. The model is as follows:

Here’s what an example result looks like (unfortunately I can’t paste the whole process):

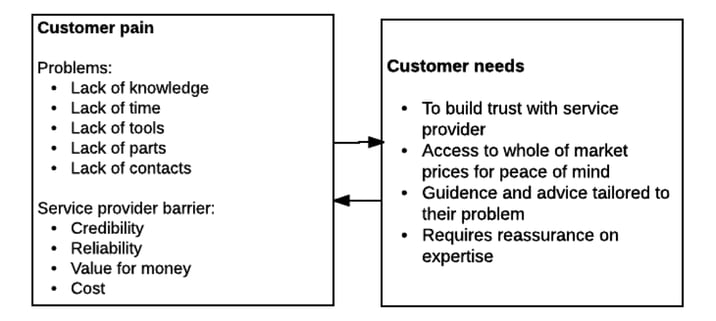

Mapping out customer pain points

Mapping out customer discovery journey

Competitive analysis

Something surprising that I keep finding across clients is the lack of competitive insight. There are a few key tools I use for this:

- Similarweb Pro for understanding traffic sources across channels, organic keyword discovery and referral discovery
- A SWOT-based methodology built on the foundation of SOSTAC, a great digital marketing framework
- Advanced Web Ranking / SEMrush (depending on whether the work is international; for larger keyword volumes I use AWR) for keyword ranking assessment on search (paid + organic)
- WhatRunsWhere to assess display advertising
- Competitor product testing and documentation

The sum of all the parts

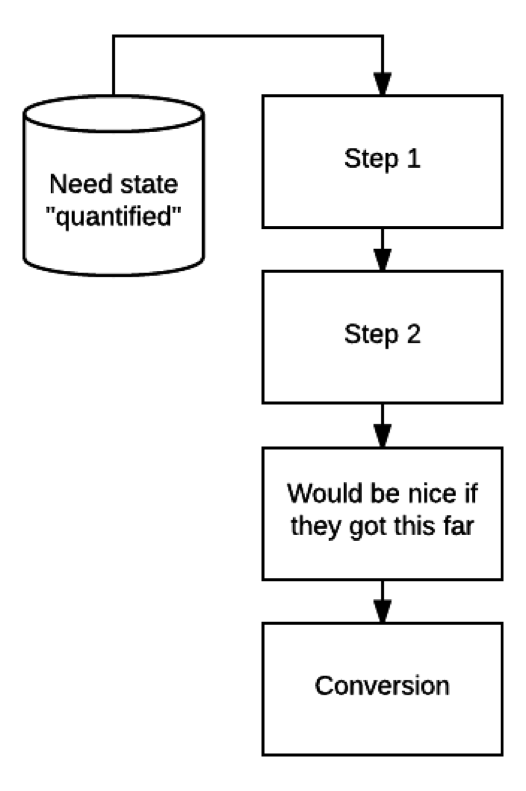

A common misunderstanding is that drop-off at a specific stage in the designated funnel simply means that a single step needs to be improved. Instead, think of each step in your process as requiring energy from your prospect, literally (glucose).

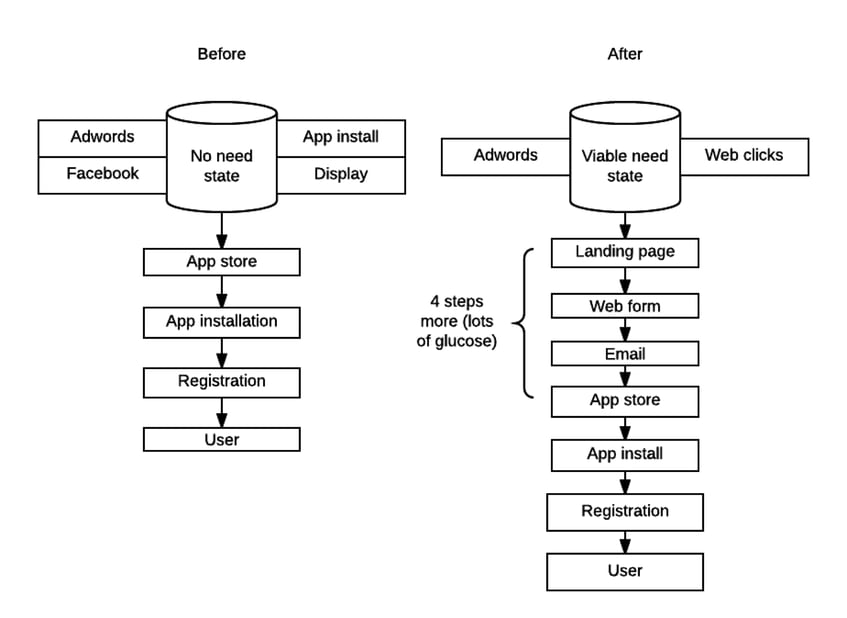

The further away a user is, and the more steps required to get their need met, the fewer conversions you’ll see. A lot of the time, startups fall into the trap of thinking “if users really want it, since it’s so great, they’ll put the energy in”. As per the basic schematic above, when the energy required from the user exceeds the energy they are willing to exert, the result is an increased cost per acquisition and unsustainable marketing efforts. From experience, this most commonly happens when the product/service benefits are hidden behind a ‘painful’ sign-up process (on the assumption that the website or landing page did a good enough job of educating the user), or when excess security / best-practice barriers are put in place.

The above applies to the sign-up funnel as well as the in-product experience. If the benefit (replace “conversion” with “benefit gained” in the schematic above) is outweighed by the effort required from the user, then you won’t see that elusive recurring use.

So reducing the total user journey will help conversion? Except the above didn’t work at all for this startup’s mobile app. Alas, the key element they were missing was solving a genuine need state: their current user journey just was not set up to meet their users’ needs. Although their app install user journey was more direct and shorter, it was considerably less effective. Why was this?

- With this startup, the way their mobile app was presented was markedly different from their competition and, crucially, from users’ current expectations.
- They were offering a solution to an issue that didn’t exist in the user’s mind: a new solution to a problem the user wasn’t demanding be solved in that way.
- They were using the wrong traffic sources. Despite generating consistent mobile app installs, this was not driving engaged customers, as they were targeting users who were not in genuine need of their service.
- Furthermore, their mobile app user journey made it too challenging to get visibility in the channels that were working. For example, their addressable search volume on AdWords contained a massive long tail of keywords and thus huge untapped traffic volume, but AdWords app install campaigns don’t show up nearly as frequently as normal text ads pointing to responsive sites on mobile (try it yourself: search for “restaurants London” or “Indian in Shoreditch”).
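The energy argument in this section can be sketched as a toy threshold model. This is an illustrative assumption, not a measured model: each step costs a fixed amount of effort, each prospect has a willingness budget that rises with how strongly they feel the need, and a prospect converts only if that budget covers the total effort. All costs and audience parameters are hypothetical:

```python
import random


def conversion_rate(willingness: list[float], step_costs: list[float]) -> float:
    """Share of prospects whose willingness covers the total effort of the journey."""
    total_effort = sum(step_costs)
    converted = sum(1 for w in willingness if w >= total_effort)
    return converted / len(willingness)


def simulate_audience(n: int, mean_need: float, seed: int = 42) -> list[float]:
    """Hypothetical audience: willingness drawn around a mean 'need' level, floored at 0."""
    rng = random.Random(seed)
    return [max(0.0, rng.gauss(mean_need, 1.0)) for _ in range(n)]


if __name__ == "__main__":
    short_journey = [1.0, 1.0]            # e.g. ad -> app install (hypothetical costs)
    long_journey = [0.8, 0.8, 0.8, 0.8]   # e.g. ad -> landing page -> form -> call

    low_need = simulate_audience(10_000, mean_need=1.5)   # users not in a need state
    high_need = simulate_audience(10_000, mean_need=4.0)  # users actively searching

    print(f"Short journey, low-need audience:  {conversion_rate(low_need, short_journey):.1%}")
    print(f"Short journey, high-need audience: {conversion_rate(high_need, short_journey):.1%}")
    print(f"Long journey,  high-need audience: {conversion_rate(high_need, long_journey):.1%}")
```

Under these assumptions, a shorter journey always helps the same audience, but a longer journey shown to people in a genuine need state still converts far better than a short journey shown to people without that need, which mirrors what happened with this startup’s app install traffic.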

3. Generating actionable insights and recommendations

The next stage is to formulate a prioritised, scalable pipeline of growth marketing opportunities to test and validate their product-market fit. Instead of trying to re-create everything from scratch when it comes to marketing, you want to fix the obvious problems first. With this startup, I focused on the most obvious issues that came out of understanding their current pain-points and mapping out their customer journeys. Here is an overview of the user journeys:

As you can see from comparing the before and after, it’s now a longer funnel! That goes against anyone’s natural inclination, mine included. However, it was very deliberately introduced to overcome the main issues preventing their short-term growth ambitions: (i) which traffic sources they were using to find users with a viable need state, and (ii) presenting a user journey more relevant to solving that need state, in line with users’ expectations. This was also supported by the view that a longer activation process that converts better takes precedence, and can be refined into a shorter funnel over time.

Impact and learnings

Recommending that they focus solely on AdWords traffic (as a viable source of users in a need state), and move from their sub-optimal app store journey to a web-based landing page to serve more addressable traffic, addressed their short-term growth challenges. It was not, however, without some important learnings.

Learning 1: Creating a convoluted initial landing page testing plan

Introducing a web-based landing page into their user journey also created iterative testing opportunities to optimise their traffic higher up the marketing funnel and generate larger potential gains downstream. However, here’s where I went wrong, and why. Naturally, being the skilful consultant (and a data-inquisitive, excessively analytical type), I recommended they go overkill on testing, particularly on their landing page. This proved to be a reasonable and conservative approach, but not a relevant and viable route; after all, a startup’s key USP is speed. A pertinent quote:

“There are only two sources of competitive advantage: the ability to learn more about our customers faster than the competition and the ability to turn that learning into action faster than the competition.” Jack Welch, former CEO of General Electric

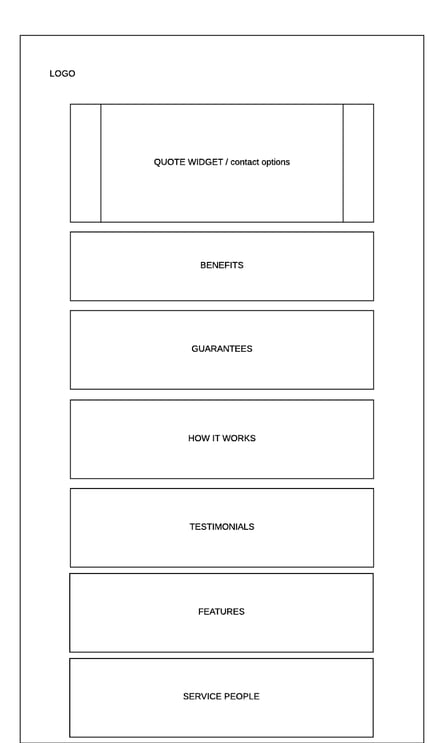

From the competitor user journey analysis, there were many variations in types of content and positioning/order of content that I was interested in testing. These ranged from benefit-led messaging to feature-set breakdowns, from three-stage “how it works” sections to glossy explainer videos, and from social proof using testimonials and third-party rating sites to reinforcement messages such as guarantees. In my haste, I recommended we test all permutations of the content as modules, to understand their importance and order of priority in fulfilling the user’s need state and improving conversion. They could afford enough AdWords traffic to generate usable insights, and believed this would help them better understand how well their positioning matched their users’ needs.

So, the beautiful piece of work you see above is the schematic structure of the recommended wireframe for the landing page. To execute this complex multivariate test (MVT), we ended up messing around a lot with third-party tools such as Wufoo / Unbounce / Typeform etc. until we realised the level of customisation we wanted was best served by WordPress (luckily, the CTO was a whiz with it). What we built was a multivariate testing platform that varied the order of the modules, customised their content, and added or removed them. This took a lot of time to spec and build.

However, we soon learned that this was a lot of wasted effort: it was clear from Crazy Egg (heatmap visualisation software) that more than 90% of users didn’t even bother scrolling down the landing page, so the order of the modules simply didn’t matter to them. We were left several weeks into designing and launching a testing platform, with neither further insight nor improved conversion performance to speak of.

The big learning here was that it’s better to iteratively test a multitude of channels and ideas than to jump to a solution that tries to solve all issues at once. An easy way to do this is to replicate the different competitor funnels to discover which is most effective, before assuming one of them isn’t right for your product or service. For this client, a great example was displaying a phone number on a landing page versus a lead form. At the very least, doing this yields learnings about the customer’s journey.
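Counting the variants shows why a test over module order and inclusion outgrows a startup’s traffic so quickly. A minimal sketch, assuming seven content modules (loosely following the content types listed earlier; the exact module list is illustrative) and that a page variant is any ordered selection of one or more modules:

```python
from math import perm


def count_layouts(n_modules: int, min_modules: int = 1) -> int:
    """Number of distinct pages when each variant is an ordered subset of modules.

    Choosing k of n modules in a specific order gives perm(n, k) layouts;
    summing over every allowed k covers addition, removal and reordering.
    """
    return sum(perm(n_modules, k) for k in range(min_modules, n_modules + 1))


if __name__ == "__main__":
    modules = [  # illustrative module list
        "benefit-led messaging",
        "feature breakdown",
        "three-step how it works",
        "explainer video",
        "testimonials",
        "third-party ratings",
        "guarantee",
    ]
    n = len(modules)
    print(f"{n} modules -> {count_layouts(n):,} possible layouts")
    print(f"Reordering only (all modules shown): {perm(n, n):,}")
```

Even the reorder-only version has thousands of variants, each needing its own share of paid traffic, and with most visitors never scrolling past the first module, the majority of those variants would have looked identical to users anyway.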

Learning 2: Missing the obvious

To improve the activation of users from a web form into using their service, I tested a number of different approaches, recording the number of steps (touchpoints) required in each of the startup’s activation funnels.

| Activation funnel | Number of steps | Level of user commitment (3 = high, 1 = low) |
| --- | --- | --- |
| Inbound phone call | 1-2 | 3 |
| Automated SMS/text | 1-2 | 2 |
| Automated email | 1-3 | 2 |
| Online form | 5 | 1 |

The important part of the process was evaluating user attrition through complex funnels: there is a sweet spot between user commitment and conversion that is specific to each industry, and the only way to uncover it is through exploration and progressive experimentation. Weeks later, I realised I had left out something quite embarrassing, which you’d think a marketer such as myself would have thought of: common trends in society. Specifically for this startup, I’m talking about the proliferation of live chat. Not advanced AI chatbots, just the straightforward website chat box we are now accustomed to. When we implemented live chat, letting their customer service team help users sooner, lead acquisition through the chat pop-up was 4x that of any of the form tests we had conducted previously.

Key takeaways

So how did this tech startup find itself in this situation and how could it be avoided in the future?

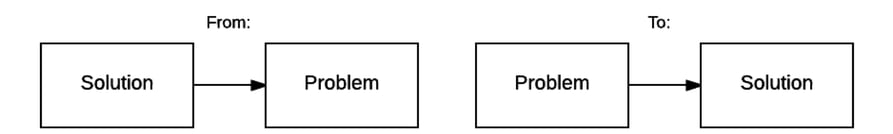

1. Focusing on the solution rather than the problem = lack of product/market fit

In this particular case, it was clear this startup attempted to come up with the perfect solution too soon, before really understanding the problem it was trying to solve. The thinking was back-to-front and led to huge problems in growing the business.

The reason this often happens is simple: getting tech talent is difficult. So the thinking goes: “Let's get the tech talent and build something that we think the market wants. We'll figure it out as we go along.” There are a few issues here:

- Once there's a tech and product team moving full steam ahead, it makes sense to start building something and iterating. The problem is that once something is built, there is a natural bias towards pushing what's been built rather than what the market wants.
- With a higher burn rate, regardless of whether it sits on an internal P&L or is angel funded, there is more pressure to deliver an effective return. This creates a short-term cultural mentality that often results in a scattergun approach to problem-solving, rather than what's necessary: deep thinking, insight and experimentation.

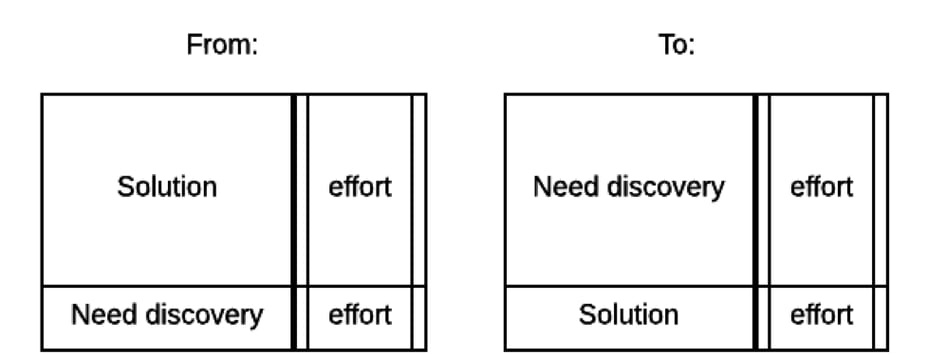

The solution here isn’t simple. The solution others in the growth community have come up with is the minimum viable product: that is, what can you fake as real, rather than investing a lot of money into building the real thing only to find out no one wants it? What I’m proposing is a shift in effort and attention towards more in-depth exploration, with fewer assumptions and a more rigorous methodology for testing ideas.

Once the need is established and clear, it’s time to shift more effort into the solution.

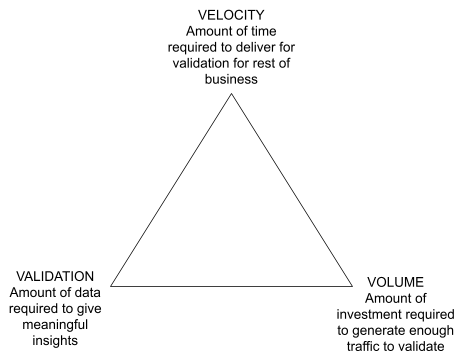

2. Conflict between velocity, volume & validation

A/B split testing and assumption verification are a luxury that only comes with driving large volumes of traffic. Startups typically have too little marketing budget to deliver enough traffic for proper statistical validation in a time frame that keeps up with the velocity of the rest of the business. In nearly all my experience of startups, I encounter one of three scenarios that inhibit the opportunity to gain meaningful insights:

- Too slow - limited resources and budgets mean it takes too long to generate enough test users to gain meaningful insights.
- Too incomplete - incorrect conclusions emerge from rushed testing, based on an unrealistic expectation of how long it takes to gain meaningful insights.
- Too complex - trying to answer too many hypotheses and therefore testing too many variables at once, which requires large amounts of traffic to gain meaningful insights.

The result is that incorrect conclusions are drawn from minimal amounts of data, with the risk of filling in too many of the blanks with intuition. In my opinion, in some cases it’s arguably riskier and more distracting to go through a sub-optimal testing process than to ignore the data and run with your intuition. Therefore, the focus needs to be on creating simple tests that have the greatest impact. It is a valuable habit and mentality to adopt. The simple rules of thumb here are:

- Test radically different assumptions to maximise the value of the insight and generate the most varied response in performance, across:
  - Funnels
  - Landing pages
  - Marketing channels

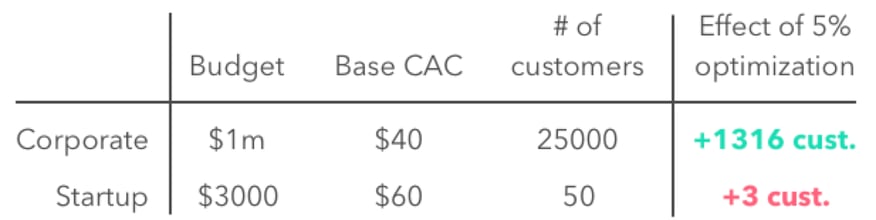

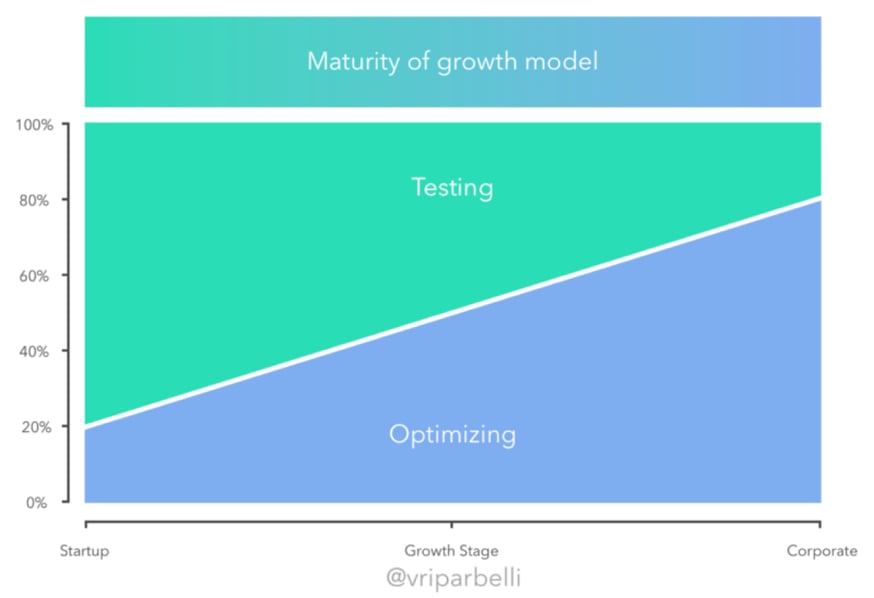
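To put numbers on the “too slow” and “too complex” scenarios, the standard normal-approximation formula for comparing two conversion rates estimates the visitors needed per variant. This is a common textbook approximation (two-sided test, equal group sizes, 5% significance, 80% power), not a method taken from this case study, and the 5% baseline conversion rate below is hypothetical:

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in each group to detect a change from p_control to p_variant.

    Normal approximation for a two-sided, two-proportion test:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    if p_control == p_variant:
        raise ValueError("rates must differ")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return ceil((z_alpha + z_power) ** 2 * variance / (p_control - p_variant) ** 2)


if __name__ == "__main__":
    baseline = 0.05  # hypothetical 5% landing-page conversion rate
    for relative_uplift in (0.05, 0.20, 1.00):
        target = baseline * (1 + relative_uplift)
        n = sample_size_per_variant(baseline, target)
        print(f"{relative_uplift:>4.0%} uplift: ~{n:,} visitors per variant")
```

Because the required sample grows with the inverse square of the difference you want to detect, radically different variants (large expected differences) can be read with a small fraction of the traffic that subtle tweaks need, and every extra variant in a multivariate test multiplies the total traffic required.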

3. Optimising too soon

Furthermore, focusing on optimisation too early is a trap I’ve seen startups fall into too often. It’s often caused by deciding that what you’re doing is right, and therefore trying to squeeze incremental improvements out of a single conversion metric. In the case of this startup, it was trying to optimise a user journey that was inherently flawed to begin with. The table below highlights the comparative impact of generating a 5% improvement in conversion through optimisation. Compared to a corporate, a 5% uplift for the startup has a somewhat negligible impact on performance (in this example, just 3 additional customers), simply because the scale at which each company operates is completely different.

Rather than focusing too early on optimisation, it’s worth doubling down on channels that are proving to deliver some traction.
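The arithmetic behind the scale argument is simple enough to sketch. Assuming the 5% is a relative uplift at constant traffic, the “3 additional customers” in the example implies a baseline of about 60 customers in the period; the corporate baseline below is hypothetical, chosen only to show the contrast:

```python
def extra_customers(baseline_customers: float, relative_uplift: float) -> float:
    """Additional customers from a relative conversion uplift, holding traffic constant."""
    return baseline_customers * relative_uplift


if __name__ == "__main__":
    uplift = 0.05
    scenarios = {
        "Startup (baseline implied by the example)": 60,
        "Corporate (hypothetical baseline)": 50_000,
    }
    for name, baseline in scenarios.items():
        print(f"{name}: +{extra_customers(baseline, uplift):,.0f} customers")
```

The same 5% is worth thousands of customers to the corporate and a handful to the startup, which is why, at early scale, effort is usually better spent on channels showing traction than on polishing a single conversion step.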

Final thoughts

As a marketer, often supporting as an outsourced CMO or interim marketing director, I’ve had the opportunity to experience the pain of startups and see where things have gone wrong, whilst burning millions in the process. This recent startup was no different in some of the pitfalls it fell into. To conclude, I would summarise these into the following areas:

- Audience - Not defining, refining or engaging the right customer type.
- Marketing - Trying to make the wrong marketing channels work and, conversely, doing the right marketing channels wrongly.
- Conversion - Marketing funnel mishaps. Focusing too deeply on incremental gains that have negligible impact on the business.
- Data & Attribution - Tracking and technical challenges with marketing reporting and insights.
- Short-termism - Focusing on creating overnight successes, which creates a culturally induced hyper-focus/myopia due to high-intensity pressure, particularly from investors.
- Lack of product fit - Relying on marketing spend to compensate for poor product/market fit.
- Product-centric - Too much focus on their own product rather than the customer journey. Falling into the “next feature fallacy” trap.
- Chasing ‘silver bullet’ solutions - Not understanding the core marketer skillset and being unduly swayed by popular concepts.