Mastering Mobile App A/B Testing: Boost Your App Store Listing's Performance

In the hyper-competitive app marketplace, understanding your audience and optimizing your App Store listing is key to success. One method that can turn the tide in your favor is Mobile App A/B Testing.

Storemaven says well-executed A/B tests can boost your organic download rates. But what does it mean, and how can it boost your app store product page's performance?

As experts in App Store Optimisation, we at Kurve have dissected how the ASO process works. This article provides the step-by-step process of conducting effective A/B testing for your App Store listing. We'll help you understand the nuances of A/B testing and how it can inform your decision-making process, leading to an optimized App Store listing that drives user acquisition.

Embark on this journey to master Mobile App A/B testing. Get ready to transform your app's performance through data-driven insights. Visit our mobile app services to learn more about how Kurve can assist you.

What is Mobile App A/B Testing?

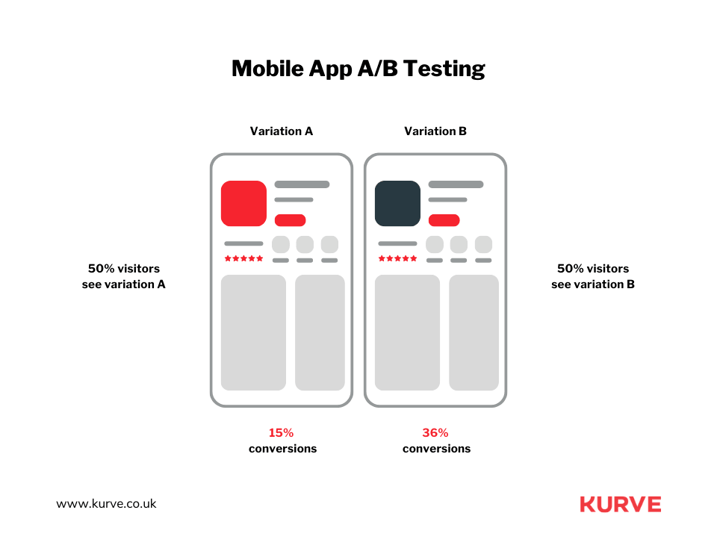

Mobile App A/B Testing, or split testing, allows you to experiment with different versions of your app store listing to determine which resonates best with your target audience.

It's like conducting a virtual focus group. You can test different elements of your app's store listing - screenshots, app icons, titles, or descriptions - to optimize the user experience and conversion rates.

You create two or more versions of your listing, each with subtle design, content, or structure changes. These versions are then shown to similar audience segments. You can identify which version is most effective by analyzing performance metrics such as click-through rates, downloads, or in-app purchases.

Does A/B Testing Work in the App Store?

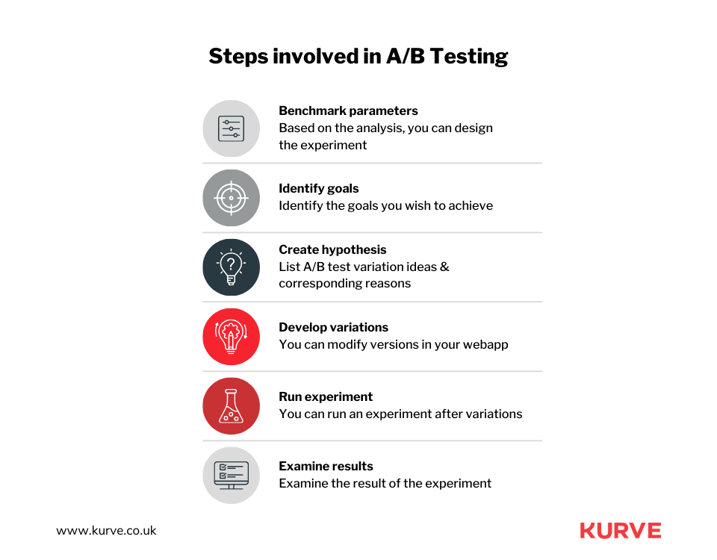

A/B testing works for app store listings, provided each step of the process is carried out carefully.

Here’s a simple guide on how to perform an A/B test:

- Define Your Objective: Determine what you wish to achieve from the A/B test. It could be increasing the click-through rate, boosting downloads, or improving user engagement.

- Create Variations: Develop two or more variations of the app listing. These might involve a title, description, screenshots, or icon changes. Each variation aims to test a specific hypothesis related to user behavior.

- Test the Variants: Present the different versions to a similar user segment over a defined period. It's essential to test only one change at a time so you can accurately attribute any performance shifts to the tested variable.

- Analyze Results: Measure the performance of each variant using relevant metrics and determine the winner.

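It's crucial to note that Apple's App Store has specific rules surrounding A/B testing. Apple's native Product Page Optimization in App Store Connect tests only icons, screenshots, and app previews, so text such as your description cannot be tested directly in the store, unlike on the Google Play Store.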

As such, mobile marketing experts at Kurve navigate these restrictions by leveraging internal and external research and platforms to test hypotheses and gather data before implementing the winning variant in the app store listing. This meticulous approach ensures full compliance with App Store policies while maximizing your app's potential for success.

Mobile A/B Testing: Step-by-Step

Navigating the complex landscape of mobile A/B testing can seem daunting. But with a step-by-step approach, you can optimize your app's store presence and boost conversion rates. The process involves a series of methodical steps that begin with diligent research and culminate in implementing the findings.

Throughout this guide, we'll offer insightful strategies and practical techniques that have proven successful for many apps. From forming a robust hypothesis to conducting a thorough analysis and follow-up, this guide is a roadmap to success in A/B testing.

Now, let's take a closer look at each step, ensuring you're fully equipped to make informed decisions that drive tangible results for your app's success.

Step 1: Research

The path to effective A/B testing begins with comprehensive research. Start by identifying your app's target demographic. Understand their preferences, behaviors, and needs - all crucial elements in creating an enticing app store listing.

Employ the power of analytic tools to gather data on user interactions and scrutinize your app store metrics. Search for patterns and trends in app analytics and collect data that may reveal valuable insights into user behavior.

Don't forget your competitors. Examine their strategies and evaluate what's working for them. Also, identify where they're falling short. The goal here is to gain an in-depth understanding of the competitive landscape and the expectations of your potential users. Accumulate as much data as possible. It will inform your decisions about which aspects of your app store listing to test.

Step 2: Form a Strong Hypothesis

After your research, the next step is to craft a robust hypothesis. This is an educated prediction of your A/B test's outcome. It proposes a cause-effect relationship that you can measure and test.

Consider a hypothesis: "If we change our app icon to a more minimalistic design, we anticipate an increase in app downloads." In this statement, the change is specific (the design of the app icon), and the anticipated outcome is defined (an increase in downloads).

A solid, well-defined hypothesis is vital for effective A/B testing. It will guide your test, and the results will validate or invalidate your prediction. Either way, you gain valuable insights for future optimizations.

Step 3: Traffic

Understanding your traffic is critical to successful A/B testing. Firstly, identify where your users are coming from. Are they finding your app through organic search results, paid ads, or direct referrals?

Analyze the behavioral patterns of these traffic segments, including their app interactions, response to your listing, and conversion rates.

You need a substantial amount of traffic to ensure statistical validity. Ensure you have enough users participating in the test to draw meaningful conclusions. If your app's traffic is relatively low, consider extending the testing period to capture more data.
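To make "enough users" concrete, here is a minimal sketch of a sample-size estimate for comparing two conversion rates. It assumes a standard two-sided test with the normal approximation; the function name, the 5% significance level, the 80% power, and the example rates are illustrative assumptions, not figures from this article.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH variant (normal approximation)."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to size a test")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical numbers: 30% of page visitors install today; we hope for 33%.
print(sample_size_per_variant(0.30, 0.33))
```

The smaller the improvement you want to detect, the more visitors each variant needs, which is why low-traffic apps usually need longer tests.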

Step 4: Create Your Variation

Now comes the creative part of the process - designing the variations for your test. Based on your hypothesis, decide which aspects of your app store listing you'll change.

Are you testing the app's title, icon, screenshots, default product page, or description? Make the alterations considering your target audience's preferences and the insights from your competitor analysis.

Ensure that the changes are significant enough to impact user behavior, but avoid changing too many elements at once. This could make it challenging to identify which change caused any observed effects.

Once the variant is ready, you're set to launch your A/B test. As users engage with your app, track the data, and always be ready to draw insights and adapt your approach.

Step 5: Run the Experiments

Getting your experiment off the ground is critical in the A/B testing process. Start by dividing your audience into two groups.

- One segment of your audience, known as the control group, will see the original version of your app store listing.

- The other segment, the test group, will see the variation you created. Splitting the audience at random ensures a balanced comparison between the two versions, mitigating any potential bias in your results.
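Store-run experiments split traffic for you, but if you test on your own landing page you have to do the split yourself. The sketch below shows one common way to do it, assuming each visitor has a stable identifier; `assign_group`, the experiment name, and the 50/50 share are hypothetical choices for illustration.

```python
import hashlib


def assign_group(user_id, experiment="icon_test", test_share=0.5):
    """Deterministically map a user to 'control' or 'test'."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "test" if bucket < test_share else "control"


# Simulated visitor ids, for illustration only.
groups = [assign_group(f"user-{i}") for i in range(10_000)]
print(groups.count("test"), groups.count("control"))
```

Hashing the identifier, rather than drawing a random number on every visit, means a returning visitor always sees the same version.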

Throughout the testing period, keep a watchful eye on the experiment results. But don't rush to a conclusion - let your test run long enough to reach statistical significance. More data makes it less likely that the results you see are due to random chance. Patience is vital here; meaningful patterns and insights often take time to emerge.
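One common way to check statistical significance for install rates is a two-proportion z-test. The sketch below is an assumption about how you might run that check, not a description of what any app store does internally; the install and visitor counts are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(control_installs, control_visitors,
                          test_installs, test_visitors):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_control = control_installs / control_visitors
    p_test = test_installs / test_visitors
    # Pooled rate: the best estimate if both versions convert equally well.
    pooled = (control_installs + test_installs) / (control_visitors + test_visitors)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / test_visitors))
    z = (p_test - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p = two_proportion_z_test(1200, 4000, 1320, 4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (0.05 is a common convention) suggests the difference is unlikely to be random noise. Checking the p-value repeatedly and stopping the moment it dips below the threshold inflates false positives, so decide the sample size or duration up front.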

Step 6: Conduct Analysis and Implementation

Once your experiment concludes, the next step is to gather your data for analysis. Dive into the metrics to determine which version - original or variation - resonated better with your audience.

Ask the crucial questions: did the variation lead to more downloads? Did it result in users spending more time on your app or perhaps even leaving better reviews?

If your data indicates that the variant was more successful than the original, it's time to consider implementing the changes in your app store listing. However, remember that not every test will produce groundbreaking results.

Even if your variant does not outperform the original, there's no need for disappointment. Regardless of its outcome, each test offers valuable insights about your target audience and their preferences. Use this data to refine your strategies further.
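When comparing the original and the variation, it helps to look at the size of the difference and how uncertain it is, not only at which number is larger. Here is a minimal sketch under the assumption that you have install and visitor counts for each version; `summarize_test` and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def summarize_test(control_installs, control_visitors,
                   test_installs, test_visitors, confidence=0.95):
    """Conversion rates, lift, and a confidence interval for the difference."""
    p_control = control_installs / control_visitors
    p_test = test_installs / test_visitors
    diff = p_test - p_control
    # Unpooled standard error, the usual choice for an interval estimate.
    std_err = sqrt(p_control * (1 - p_control) / control_visitors
                   + p_test * (1 - p_test) / test_visitors)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return {
        "control_rate": p_control,
        "test_rate": p_test,
        "absolute_lift": diff,
        "relative_lift": diff / p_control,  # assumes the control has some installs
        "ci_low": diff - z * std_err,
        "ci_high": diff + z * std_err,
    }


# Hypothetical counts, for illustration only.
summary = summarize_test(1200, 4000, 1320, 4000)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

If the interval between `ci_low` and `ci_high` includes zero, the data does not clearly favor either version, which is itself a useful finding.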

Step 7: Follow Up

The final step in the A/B testing process is the follow-up.

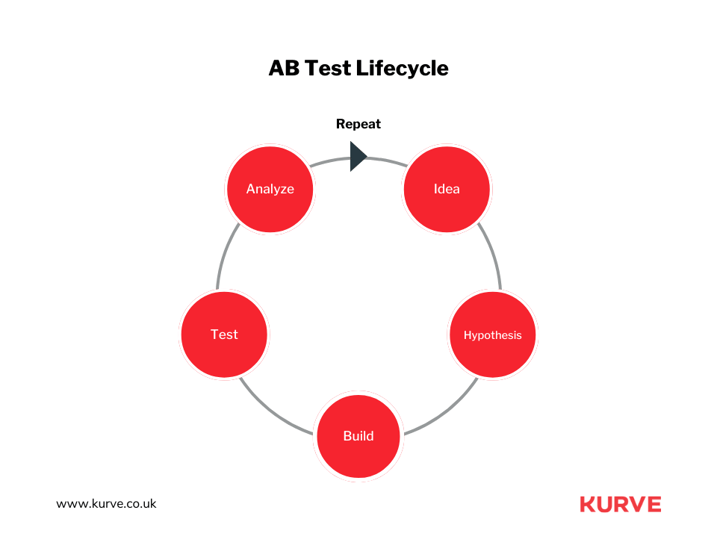

Understanding that A/B testing isn't a one-off process you can set and forget is crucial. The mobile app landscape is ever-evolving, with user preferences, competitor strategies, and industry trends changing continually. Staying relevant and competitive requires you to be on top of these changes.

Make it a habit to run follow-up tests after every A/B test. This could involve testing another feature of your app store listing or refining the previous test based on the insights gathered.

Each iteration of A/B testing brings you closer to perfecting your app's presence on the store and improving user experience. Persistence and continuous learning are the keys to boosting your app store product page's performance.

Best Practices for A/B Testing on the App Store

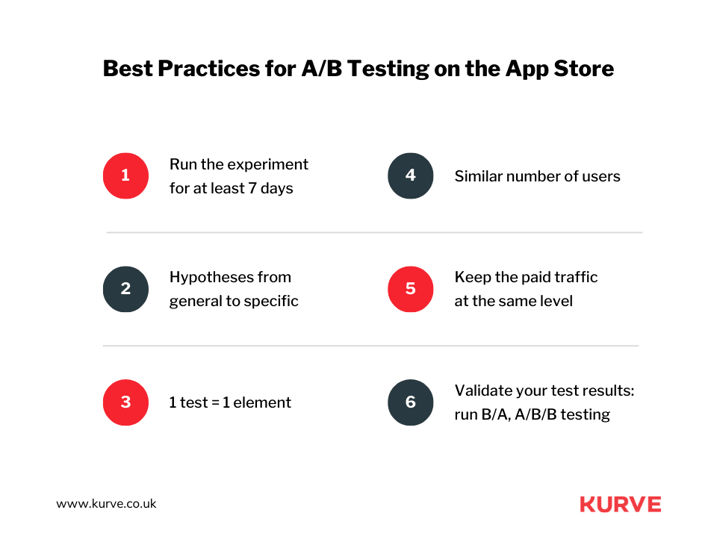

Successful A/B testing hinges on more than just following a series of steps. The tactics you deploy during your testing process can make a world of difference to the results you obtain.

This section will enlighten you about the best practices that maximize the potential of your A/B testing efforts. These practices are about adopting a data-driven mindset, making informed decisions, and optimizing your app store listing for peak performance.

So let's gear up to explore these game-changing practices for A/B testing on the App Store.

Set up your tests based on the strength of your hypothesis

When conducting A/B testing, the strength of your hypothesis can significantly impact the success of your test. Creating a solid, data-backed hypothesis that addresses a specific concern or goal is essential.

When your hypothesis is concrete and well-founded, it directs your A/B testing toward a clear, measurable outcome. This helps ensure that your tests are meaningful and their results are actionable.

Invest adequate time in crafting your hypothesis. Use data, user insights, and industry trends to give your hypothesis the power to drive your A/B testing plan.

Do not replicate Google Play’s A/B strategy for iOS

It's a common misconception that what works for Google Play will work for the App Store. Yet, each platform has unique guidelines, user behaviors and algorithmic nuances that affect how you conduct your A/B testing. Implementing the same strategy across both platforms might not yield the expected results.

For example, Apple's native Product Page Optimization covers only creative assets (icons, screenshots, and app previews), whereas Google Play's built-in store listing experiments also let you test descriptions. These differences should inform your testing strategy for each platform to gain the most effective results.

Do not be in a hurry to end the test too early

Patience is critical in A/B testing. Tests need to run for a significant duration to yield accurate, meaningful results. If you're too quick to conclude your test, you may base your decisions on incomplete or skewed data.

Typically, a test should run for at least 7 to 14 days, depending on the traffic your app receives. During this period, monitor the test and avoid making any other changes to your app store listing, so you don't influence the results.

Only conclude your test when you have enough data to make a reliable, informed decision.
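As a rough planning aid, you can estimate the test duration from your daily traffic and the sample you need. The sketch below is a back-of-the-envelope calculation with hypothetical inputs. The 7-day minimum follows the guideline above; rounding up to whole weeks is an added assumption, made so that weekday and weekend traffic are equally represented.

```python
from math import ceil


def estimated_test_days(required_per_variant, daily_visitors,
                        variants=2, min_days=7):
    """Days needed to reach the required sample, rounded up to whole weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    total_needed = required_per_variant * variants
    raw_days = ceil(total_needed / daily_visitors)
    days = max(raw_days, min_days)
    return ceil(days / 7) * 7  # whole weeks cover weekday and weekend traffic


# Hypothetical: 3,800 visitors needed per variant, 600 page visitors per day.
print(estimated_test_days(required_per_variant=3800, daily_visitors=600))  # 14
```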

Look out for external factors that can impact installs

In A/B testing, it's essential to recognize the external factors that could influence the number of app installs. These factors can range from seasonal trends and marketing campaigns to updates in your app's features or even competitor activities.

For example, a holiday season might spur an increase in app installs due to users downloading new apps for leisure. Alternatively, a promotional campaign running simultaneously with your test could artificially inflate your install rates.

Monitoring these factors ensures that your A/B test results reflect the actual impact of your listing changes rather than external influences. To ensure accuracy, always account for these variables when analyzing your A/B test results.

Optimize for the right audience

Your app store listing should cater to the preferences of your target audience for it to drive installs. This principle holds even when you're conducting A/B testing.

Consider who your app is meant for and what they value. Do they prefer a minimalist aesthetic, or are they attracted to vibrant colors and animation? What language do they use, and what benefits are they seeking from your app?

When setting up your test variants, keep these audience preferences in mind. By tailoring your A/B tests to your target audience's preferences, you'll be able to uncover the changes that truly resonate with them and encourage them to install your app.

Test one asset at a time

Running an A/B test involves comparing two or more versions of your app store listing to determine which performs best. Changing only one element at a time is critical to get accurate results.

If you change multiple components between versions, such as the app icon and the first screenshot, it becomes difficult to attribute any differences in performance to a specific change. Was it the new icon or the screenshot that increased the install rate?

Testing one asset at a time allows you to isolate the effect of each change. It also provides clearer insights into what works and what doesn't for your app store listing. It's a meticulous process, but the precise results you gain are worth the effort.
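A simple pre-launch check can catch variants that accidentally change more than one asset. The sketch below assumes you describe each listing version as a dictionary of assets; the field names and file names are hypothetical.

```python
def changed_assets(control, variant):
    """Return the listing fields whose values differ between two versions."""
    keys = set(control) | set(variant)
    return sorted(k for k in keys if control.get(k) != variant.get(k))


# Hypothetical listing definitions, for illustration only.
control = {"icon": "icon_v1.png", "title": "Example App", "screenshot_1": "s1_v1.png"}
variant = {"icon": "icon_v2.png", "title": "Example App", "screenshot_1": "s1_v1.png"}

diff = changed_assets(control, variant)
if len(diff) != 1:
    raise ValueError(f"expected exactly one changed asset, found {diff}")
print(diff)
```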

Iterative testing

A/B testing isn't a one-time event but an ongoing process of refinement and improvement. Iterative testing entails conducting a series of tests, with each one building on the learnings from the previous ones.

Once you've concluded an A/B test and analyzed the results, use those insights to inform your next test. If changing the color scheme of your app icon led to more installs, could tweaking the design further improve results? Or should you now focus on another asset, like your screenshots or description?

Iterative testing allows you to enhance your app store listing, driving incremental increases in your conversion rate over time.

Final Thoughts

The power of A/B testing in the world of app store listings is genuinely phenomenal.

It's the secret weapon that allows you to step into your users' shoes, understand their preferences, and refine your app's presence to meet their needs. Mastering A/B testing is an ongoing process, but with each step, you come closer to achieving your app's potential.

When you're ready to delve deep into A/B testing, we at Kurve are here to assist you. Our expert team, adept at app marketing strategies and A/B testing, can help you optimize your app listing for maximum visibility and user engagement.

We encourage you to explore our App Campaign Optimization Guide. This comprehensive resource will equip you with the necessary knowledge to leverage A/B testing effectively, allowing you to increase downloads and drive your app's success on the App Store.

Your journey toward mastering the art of A/B testing starts here.